Anycast – “Think” before you talk – Part I

INTRODUCTION

How you experience the performance of an application boils down to where you stand on the Internet.

Global reachability means everyone can reach everyone, but not everyone gets the same level of service. The Internet looks different from each user’s vantage point, and proximity plays a significant role in the user experience.

Why can’t everyone everywhere have the same level of service, and why do we need optimisations in the first place? Mainly, it boils down to the protocols used for reachability and to old congestion management techniques. The Internet comprises old protocols that were never designed with performance in mind. As a result, there are certain paths we must take to overcome its shortcomings.

PERFORMANCE CHALLENGES

The primary challenge arises from how Transmission Control Protocol (TCP) operates, both under normal conditions and under stress once the inbuilt congestion control mechanisms kick in.

Depending on configuration, it can take anywhere from 3 to 5 RTTs to send data back to the client. In addition, TCP’s congestion control mechanisms allow only 10 segments to be sent in the first RTT, with the window increasing after that.
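To make the cost concrete, here is a minimal sketch of how many round trips an idealised slow start needs to deliver a payload. It assumes a 10-segment initial window, a 1460-byte segment size, no packet loss and no receive-window limit, and it ignores connection setup. The function name and parameters are illustrative only.

```python
import math

def round_trips_to_send(payload_bytes, mss=1460, initial_cwnd=10):
    """Count the RTTs needed to deliver payload_bytes under idealised slow start.

    Assumptions: the window starts at initial_cwnd segments and doubles every
    RTT, nothing is lost, and the receiver never limits the sender.
    """
    segments_left = math.ceil(payload_bytes / mss)
    cwnd = initial_cwnd
    rtts = 0
    while segments_left > 0:
        segments_left -= cwnd  # send one full window in this round trip
        cwnd *= 2              # slow start: the window doubles each RTT
        rtts += 1
    return rtts

if __name__ == "__main__":
    for size in (14_600, 100_000, 1_000_000):
        print(f"{size} bytes -> {round_trips_to_send(size)} RTT(s)")
```

Under these assumptions a response of about 14 KB fits in a single round trip, while 1 MB needs seven, which is why large payloads over long paths suffer most.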

Unfortunately, this is the basis for congestion control on the Internet, and it hinders application performance, especially for applications with large payloads.

HELP AT HAND

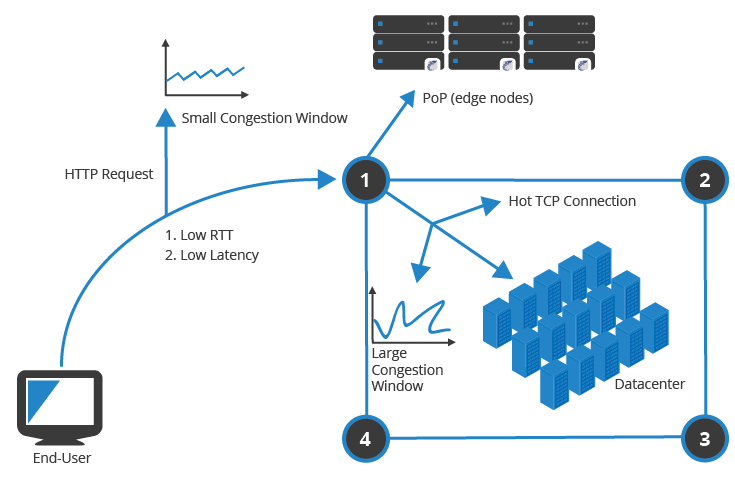

There are a couple of things we can engineer to help with this. The main one is to move content closer to users by rolling out edge nodes (PoPs) that proxy requests and cache static content. Edge nodes increase client-side performance because all connections terminate close to the users. In simple terms, the closer you are to the content, the better the performance.

Other engineering tricks involve tweaking how TCP operates. This works to a degree, making bad less bad or good better, but it doesn’t necessarily turn a bad connection into a good one.

The correct peering and transits also play a role. Careful selection based on population and connectivity density is key. Essentially, optimum performance comes down to many factors working together to reduce latency as much as possible.

PoP locations, peerings and transits, with all available optimisations, are only part of the puzzle. The next big question is: how do we get users to the right PoP location? And when failures occur, how efficiently do users fail over to alternative locations?

In theory, we have two main options for PoP selection:

a) Traditional DNS based load balancing,

b) Anycast.

Before we address these mechanisms, let’s dig deeper into some key CDN technologies to better understand which network type is optimal.

Initially, we had web servers in central locations servicing content locally. As users became more dispersed so did the need for content. You cannot have the same stamp for the entire world! There needs to be some network segregation which gives rise to edge nodes or PoPs placed close to the user.

WHAT POPS SOLVE

Employing a PoP decreases connection time because the connection terminates at the local PoP. When the client sends an HTTP GET request, the PoP forwards it to the data centre over an existing hot TCP connection. The local PoP and the central data centre are continually talking, so the congestion windows are large, allowing even 1 MB of data to be sent in one RTT. This greatly improves application performance; a world without PoPs would be a pretty slow one.
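A rough back-of-the-envelope model shows why the warm backhaul connection matters. All numbers here are hypothetical: a 150 ms RTT from client to data centre, a 20 ms RTT from client to PoP, a 140 ms RTT from PoP to data centre, 2 RTTs of connection setup, and 7 RTTs of slow start (roughly what an idealised 10-segment initial window with 1460-byte segments needs for 1 MB). The model is deliberately simplistic and ignores pipelining, caching and loss.

```python
def direct_fetch_ms(rtt_ms, setup_rtts, slow_start_rtts):
    """Client talks straight to the data centre over a cold connection."""
    return rtt_ms * (setup_rtts + slow_start_rtts)

def via_pop_fetch_ms(edge_rtt_ms, backhaul_rtt_ms, setup_rtts, slow_start_rtts):
    """Client talks to a nearby PoP, which reuses a warm backhaul connection."""
    # Client <-> PoP: full setup and slow start, but over the short edge RTT.
    edge_ms = edge_rtt_ms * (setup_rtts + slow_start_rtts)
    # PoP <-> data centre: the connection is already open with a large window,
    # so the whole response is assumed to arrive in a single round trip.
    backhaul_ms = backhaul_rtt_ms * 1
    return edge_ms + backhaul_ms

if __name__ == "__main__":
    direct = direct_fetch_ms(rtt_ms=150, setup_rtts=2, slow_start_rtts=7)
    via_pop = via_pop_fetch_ms(edge_rtt_ms=20, backhaul_rtt_ms=140,
                               setup_rtts=2, slow_start_rtts=7)
    print(f"direct: {direct} ms, via PoP: {via_pop} ms")
```

With these illustrative figures the direct fetch takes 1350 ms and the fetch through the PoP takes 320 ms, because the expensive round trips happen over the short edge path while the long path is crossed only once.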

Selecting the right locations for PoP infrastructure plays an important role in overall network performance and user experience. The most important site selection criterion is to go where the eyeball networks are. You should always try to maximise the number of eyeball networks you are close to when you roll out a PoP. As a result, two fundamental aspects come into play: physical and topological distance.

Well-developed countries have well-advanced peering strategies, while others are not so lucky and have less peering diversity due to size or government control. An optimum design places PoPs in large population centres with high population and connectivity densities. With power and space being secondary concerns, diverse connectivity is King when it comes to selecting the right PoP location.

NEW ARCHITECTURES

If you were to build a Content Delivery Network ten years ago, the design would have consisted of heavy physical load balancers and separate appliances to terminate Secure Sockets Layer (SSL). Current best practice has moved away from this; it’s now all about lots of RAM, SSD and high-performance CPU packed into compute nodes. Modern CPUs are just as good at SSL processing, and it’s cleaner to terminate everything at the server level rather than on costly dedicated appliances.

CacheFly pushes network complexity to its high-performing servers and runs equal-cost multipath (ECMP) right to the host level. Pushing complexity to the edge of the network is the only way to scale and reduce central state. ECMP right down to the host gives you a routerless design and gets rid of centralised load balancers, allowing incoming requests to be load balanced in hardware on the Layer 3 switch before the TCP magic is performed on the host.
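The idea behind stateless ECMP load balancing can be sketched in a few lines: hash the flow’s 5-tuple and use the result to pick one of the equal-cost next hops, so every packet of a flow lands on the same host without any per-connection table. This is only an illustration. Real switches use vendor-specific hash functions in hardware rather than SHA-256, and the function name and addresses below are made up for the example.

```python
import hashlib

def pick_next_hop(src_ip, src_port, dst_ip, dst_port, protocol, next_hops):
    """Map a flow's 5-tuple to one of several equal-cost next hops.

    The same 5-tuple always hashes to the same index, so no per-flow state
    is needed. SHA-256 stands in for the switch's hardware hash here.
    """
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]

if __name__ == "__main__":
    hosts = ["10.0.0.1", "10.0.0.2", "10.0.0.3", "10.0.0.4"]  # hypothetical hosts
    for port in (51000, 51001, 51002):
        hop = pick_next_hop("198.51.100.7", port, "203.0.113.10", 443, "tcp", hosts)
        print(f"source port {port} -> {hop}")
```

Note that a plain modulo remaps many flows when the number of next hops changes; how a given deployment handles that is outside the scope of this sketch.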

CacheFly operates a Route Reflector design consisting of iBGP internally and eBGP to the WAN.

FORGET ABOUT STATE

ECMP designs are not concerned with scaling an appliance that holds lots of state. Devices with state are always hard to scale, and load balancing with pure IP is so much easier. It allows you to do the inbound load balancing in hardware without the high costs and operational complexity of multiple load balancers and expensive routers. With the new architectures, everything looks like an IP packet and all switches forward it in hardware. By contrast, appliances usually need to be deployed in pairs for redundancy, with additional spares kept in stock just in case. Costly physical appliances sitting idle in a warehouse are good for no one.

We have already touched on the two methods for getting clients to the PoP: traditional DNS-based load balancing and Anycast. Anycast is now deemed the superior option, but in the past it has met some barriers. Anycast has long been popular in the UDP world and now offers the same benefits to TCP-based applications. The barriers to adoption have mainly been down to inaccurate information and a lack of testing.

BARRIERS TO TCP ANYCAST

The biggest problem for TCP/Anycast is not route convergence and application timeouts; it’s that most people think it doesn’t work. People believe that they know without knowing the facts or putting anything into practice to get those facts.

If you haven’t tested it, then you shouldn’t talk; if you have used it and experienced problems, let’s talk. People think that routes are going to converge quickly and constantly bounce between multiple locations, causing TCP resets. This doesn’t happen as often as you might think, and it is much worse when Anycast is not used.

There is a perception that the Internet, end-to-end, is an awful place. While there are many challenges, it’s not as bad as you might think, especially if the application is built correctly. The Internet is never fully converged, but is this a major problem? If we have deployed an Anycast network, how often would the Anycast IP from a given PoP change? Almost never.

The Internet may not have a steady state, but what does change is 1) the association of a prefix with an Autonomous System (AS) and 2) the peering between ASes. These are the two factors that can alter best-path selection. As a result, we need reliable peering relationships, but this has nothing to do with the Anycast versus Unicast debate.
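As a simplified illustration of why a client’s Anycast PoP rarely changes, the sketch below picks the best announcement of a prefix purely by shortest AS path. This is only one step of the real BGP decision process, which also evaluates other attributes such as local preference. The PoP names and the tie-break are hypothetical, and the AS numbers come from the range reserved for documentation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Announcement:
    pop: str        # PoP originating the Anycast prefix (hypothetical name)
    as_path: tuple  # AS numbers between the observer and that PoP

def best_path(announcements):
    """Pick the announcement with the shortest AS path.

    Ties are broken by PoP name purely to keep the example deterministic.
    """
    return min(announcements, key=lambda a: (len(a.as_path), a.pop))

if __name__ == "__main__":
    # The same prefix is announced from two PoPs, as seen from one network.
    paths = [
        Announcement("london", (64500, 64496)),
        Announcement("frankfurt", (64501, 64502, 64496)),
    ]
    print("before:", best_path(paths).pop)

    # A peering change lengthens the path towards London; only now does the
    # selected PoP change.
    paths[0] = Announcement("london", (64503, 64504, 64505, 64496))
    print("after:", best_path(paths).pop)
```

As long as the prefix-to-AS association and the peerings stay the same, the inputs to this selection stay the same, and so does the chosen PoP.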

BUILDING BETTER NETWORKS

Firstly, we need to agree that there is no global rulebook for network design, and the creative ART of networking comes into play in every Service Provider design. While SP networks share similar connectivity goals, each SP network is designed and configured differently. Some use per-packet load balancing, but most do not. But we, as a network design community, are rolling out better networks.

There will always be an individual ART to network design unless we fully automate the entire process, from design to device configuration, which will not happen on a global scale anytime soon. There are many approaches to network design, but the consensus is that we are building and operating better networks. The modern Internet, a network that never fully converges, is overall pretty stable.

Nowadays, we are building better networks. We are forced to do so because networks are crucial to service delivery. If the network is down or not performing adequately, the services that run on top are useless. This pressure has forced engineers to take a business-oriented approach to network design, with automation introduced as an integral part of overall network stability.

NEW TOOLS

In the past, we had primitive debugging and troubleshooting tools, with PING and Traceroute the most widely used. Both are crude ways to measure performance and only tell administrators if something is “really” broken. Today, we have an entirely new range of telemetry systems at our disposal that show administrators where the asymmetrical paths are and report overall network performance based on numerous Real User Monitoring (RUM) metrics.

Continue Reading Anycast – “Think” before you talk – Part II

This guest contribution is written by Matt Conran, Network Architect for Network Insight. Matt Conran has more than 17 years of networking industry experience with entrepreneurial start-ups, government organisations and others. He is a lead Network Architect and has successfully delivered major global greenfield service provider and data centre networks.

Image Credit: Pexels
